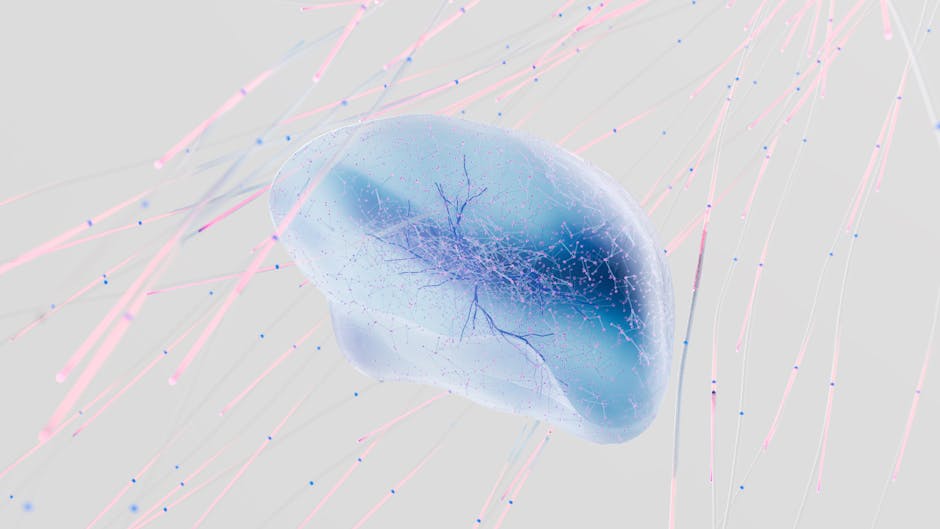

Picture this: you’re running a massive AI model that draws enough electricity to power a small town for a day. Meanwhile, the three-pound organ between your ears performs billions of calculations on roughly the power of a dim light bulb. This striking contrast has haunted computer scientists for decades. Until now.

A new breed of processors is emerging that doesn’t just crunch numbers faster. It thinks differently. Neuromorphic computing chips mimic the architecture of biological brains, processing complex tasks with remarkable efficiency. As AI’s appetite for power threatens to overwhelm our energy infrastructure, these brain-inspired chips offer a compelling alternative. From autonomous drones to medical devices, they’re quietly transforming how machines learn and respond to the world around us.

Decoding Neuromorphic Architecture

Traditional computers are like assembly lines.

Data moves sequentially from memory to processor and back, creating bottlenecks that waste time and energy. Your brain works nothing like this. Neurons fire asynchronously, processing and storing information in the same place, with trillions of connections adapting in real time.

Neuromorphic chips replicate this biological elegance. IBM’s TrueNorth and Intel’s Loihi represent pioneering efforts in this space [Openpr]. Intel’s Loihi 2 chip contains approximately one million artificial neurons that communicate through spike-based signals, mimicking how brain cells transmit information. Rather than processing every piece of data continuously, these neurons fire only when something meaningful happens, just as biological neurons do.
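To make the idea of a neuron that fires only when something meaningful happens more concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a textbook model commonly used in spiking networks. It is an illustration only, not how Loihi or TrueNorth are programmed; the function name `simulate_lif` and the `threshold`, `leak`, and `reset` values are assumptions chosen for the example.

```python
def simulate_lif(inputs, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    Returns a list of 0/1 values, one per input sample (1 means a spike).
    All parameter values are illustrative, not taken from any real chip.
    """
    potential = reset
    spikes = []
    for current in inputs:
        # Leak part of the stored potential, then integrate the new input.
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)     # fire an event
            potential = reset    # and reset the membrane potential
        else:
            spikes.append(0)     # stay silent: no event, no downstream work
    return spikes


quiet = [0.0] * 5                      # nothing happening
burst = [0.6, 0.6, 0.0, 0.0, 0.0]      # a brief stimulus
print(simulate_lif(quiet + burst))
```

With these inputs the neuron stays silent through the quiet stretch and emits a single spike once the stimulus has accumulated past the threshold. That sparsity is where the energy savings come from: most of the time, there is nothing to compute.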

The breakthrough lies in eliminating what engineers call the von Neumann bottleneck. IBM’s TrueNorth integrates 256 million synapses directly with neurons, enabling parallel processing without the constant data shuffling that slows conventional computers. Think of it as the difference between a symphony orchestra playing together versus musicians taking turns one at a time.

Investment Surge Signals Market Confidence

The money flowing into neuromorphic computing tells a compelling story.

The market is projected to grow from $920 million in 2024 to $8.76 billion by 2033, a reported compound annual growth rate of 30.4% [Cenjows]. North America currently leads with 38% market share, though competition is intensifying globally [Cenjows].
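For readers who want to check growth projections like this one, compound annual growth rate is simple to compute. The sketch below uses the standard CAGR formula with the figures quoted above; the helper names `cagr` and `project` are made up for this example. Run over the nine years from 2024 to 2033, the endpoints imply a somewhat lower rate than the reported 30.4%, which probably means the source report uses a different base year or forecast window. That explanation is an assumption, not something the source confirms.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two endpoints."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value, rate, years):
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


# Figures as quoted in the article; the 9-year window is an assumption.
implied = cagr(920e6, 8.76e9, 2033 - 2024)
print(f"Implied CAGR, 2024 to 2033: {implied:.1%}")

# What the reported 30.4% rate would produce over the same window.
at_reported_rate = project(920e6, 0.304, 2033 - 2024)
print(f"$920M at 30.4% for 9 years: ${at_reported_rate / 1e9:.2f}B")
```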

Tech giants aren’t just watching from the sidelines. Intel and IBM have dedicated neuromorphic divisions [Constable], while startups like BrainChip and SynSense have secured substantial funding rounds. Government initiatives add further momentum. The EU’s Human Brain Project and DARPA’s SyNAPSE program have collectively invested over a billion dollars in neuromorphic research infrastructure.

Cloud access is lowering barriers to entry. Intel’s Neuromorphic Research Cloud now provides access to Loihi systems for researchers and enterprises testing edge AI applications. This democratization of access could accelerate adoption significantly, allowing developers to experiment without massive hardware investments.

Real Applications Transforming Industries

Beyond laboratory demonstrations, neuromorphic chips are solving real problems.

Consider autonomous drones navigating cluttered warehouses or dense forests. Traditional vision processors consume several watts and struggle with real-time obstacle detection. SynSense’s Speck chip enables palm-sized drones to navigate using just 20 milliwatts, roughly 100 times more energy-efficient than conventional approaches [Constable].

Healthcare applications are equally promising. Wearable devices equipped with neuromorphic processors can continuously analyze ECG and EEG signals locally, without draining batteries or requiring constant cloud connectivity. For patients with heart conditions or epilepsy, this means reliable monitoring that lasts weeks instead of hours.

Smart factories represent another frontier. Neuromorphic sensors detect equipment anomalies in microseconds, enabling predictive maintenance that prevents costly breakdowns. The efficiency gains matter: these systems consume a fraction of the power required by conventional IoT sensors, making large-scale deployment economically viable.

Energy Efficiency Changes Everything

Here’s a sobering reality: training a single large AI model can emit over 600,000 pounds of CO2.

As AI proliferates, this environmental footprint becomes unsustainable. Neuromorphic chips offer a different path.

IBM’s neuromorphic chip has demonstrated 280 times better energy efficiency than GPUs for image recognition tasks, completing operations while drawing just 20 milliwatts. For battery-powered devices, this translates to months of operation instead of hours. For data centers, it could mean reducing AI inference costs by up to 90%.
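The battery-life claim comes down to simple arithmetic: runtime is stored energy divided by average power draw. Here is a rough sketch using the 20 milliwatt figure from above. The 3.7 Wh battery (a 1,000 mAh cell at 3.7 V) and the 2 W conventional processor are hypothetical values picked for illustration, and the calculation ignores sensors, radios, and conversion losses.

```python
def runtime_hours(battery_wh, power_w):
    """Ideal runtime in hours for a battery of `battery_wh` watt-hours."""
    return battery_wh / power_w


BATTERY_WH = 3.7        # hypothetical: 1,000 mAh cell at 3.7 V nominal
CONVENTIONAL_W = 2.0    # hypothetical conventional vision processor
NEUROMORPHIC_W = 0.020  # the 20 mW figure quoted in the article

conventional = runtime_hours(BATTERY_WH, CONVENTIONAL_W)
neuromorphic = runtime_hours(BATTERY_WH, NEUROMORPHIC_W)

print(f"Conventional: {conventional:.1f} hours")
print(f"Neuromorphic: {neuromorphic:.0f} hours ({neuromorphic / 24:.1f} days)")
print(f"Ratio: {neuromorphic / conventional:.0f}x")
```

On this small cell the gap is hours versus days. Stretching to months takes a larger battery or a device that spends most of its time idle, which event-driven hardware is well suited to.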

The economic implications extend beyond electricity bills. Companies facing sustainability mandates can deploy AI capabilities without compromising environmental commitments. Remote sensors in agriculture, wildlife monitoring, or infrastructure inspection become practical when they can operate for years on small batteries. Edge computing becomes truly viable when you can process data where it’s generated rather than shipping it to distant servers.

The Road Ahead

Despite the promise, neuromorphic computing faces real hurdles.

Developers need to learn entirely new programming paradigms. Spiking neural networks operate fundamentally differently from the dense, continuous-valued networks that developers build in TensorFlow and PyTorch, the frameworks that dominate machine learning today. It’s like asking web developers to suddenly write assembly code.
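One way to get a feel for the shift is to look at the data itself. A conventional network consumes every sample of a signal, while an event-driven system sees only the moments when the signal changes. The sketch below shows a simple delta (threshold-crossing) encoder in plain Python. It is a generic illustration, not the API of Lava or any other framework, and the function name `delta_encode` and the threshold value are assumptions.

```python
def delta_encode(samples, threshold):
    """Convert a sampled signal into sparse (index, polarity) events.

    An event is emitted only when the signal has moved by at least
    `threshold` since the last event; polarity is +1 (up) or -1 (down).
    """
    if not samples:
        return []
    events = []
    reference = samples[0]
    for i, value in enumerate(samples[1:], start=1):
        change = value - reference
        if abs(change) >= threshold:
            events.append((i, 1 if change > 0 else -1))
            reference = value  # track the level at the most recent event
    return events


signal = [0.0, 0.0, 0.1, 0.9, 1.0, 1.0, 1.0, 0.2, 0.0, 0.0]
events = delta_encode(signal, threshold=0.5)
print(f"{len(signal)} samples -> {len(events)} events: {events}")
```

Ten samples collapse into two events, one for the rise and one for the fall. Writing software around sparse events like these, rather than around dense arrays, is the mental adjustment developers face.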

Standardization efforts are helping. Open-source frameworks like Intel’s Lava SDK are lowering entry barriers, while industry groups work on common interfaces. Major firms showcased neuromorphic prototypes with enhanced energy efficiency and on-chip learning capabilities in late 2025 [Cenjows], signaling accelerating progress.

Realistic timelines are emerging. Niche applications in edge AI and specialized sensors will likely dominate through 2026, with broader enterprise adoption accelerating between 2027 and 2030 as tools mature. The technology won’t replace GPUs for training massive language models. But it could revolutionize how we deploy AI in the physical world.

Neuromorphic computing represents more than incremental improvement. It’s a fundamental rethinking of how machines process information. By mimicking the brain’s architecture rather than just its outputs, these chips offer efficiency gains that could solve AI’s growing energy crisis while enabling applications impossible with traditional hardware.

For those curious about this emerging field, Intel’s Neuromorphic Research Cloud offers accessible entry points for experimentation. The future of computing may not be measured in raw speed, but in how intelligently machines can think and how little energy they need to do it.
