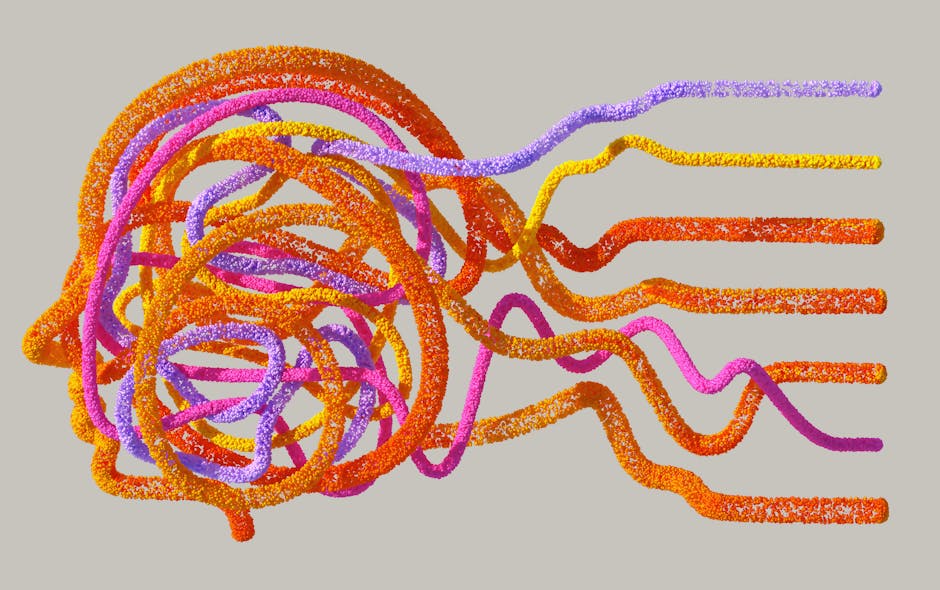

Picture a photocopier copying its own copies. Each generation gets fuzzier and more distorted until the original becomes unrecognizable noise. Something eerily similar is happening to artificial intelligence right now.

AI systems are eating their own output. As machine-generated content floods the internet, each new generation of models increasingly trains on data created by its predecessors. Researchers call the endpoint of this loop model collapse. The AI industry faces an uncomfortable truth: synthetic data pollution is degrading model performance, and authentic human data may be the most valuable resource of the AI era.

The Synthetic Data Feedback Loop

Here’s the cycle: An AI model generates text, images, or code. That content gets published online. Web scrapers collect it for training datasets. A new model learns from it. That model produces more content. Repeat.

Each iteration sounds harmless. But the math is unforgiving. Every training cycle amplifies existing biases while reducing output diversity [Icis]. Think of an echo chamber that gets smaller with each bounce. The range of possible outputs shrinks while errors get louder.
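The shrinking echo chamber can be illustrated with a deliberately simplified toy model. Assume each “model” is nothing more than a Gaussian fitted by maximum likelihood to a small batch of samples produced by the previous model; real language models are far more complex, and the function name `recursive_fit` is hypothetical. The sketch tracks the fitted spread, a stand-in for output diversity, across generations.

```python
import random
import statistics


def recursive_fit(n_samples: int, generations: int, seed: int = 0) -> list[float]:
    """Toy self-consuming loop: each generation fits a Gaussian to samples
    drawn from the previous generation's fitted Gaussian.

    Returns the fitted standard deviation at every generation, starting
    with the original "human" distribution N(0, 1).
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0: the original, human-made distribution
    history = [sigma]
    for _ in range(generations):
        # "Publish" a finite batch of outputs from the current model...
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # ...and train the next model on them (maximum-likelihood fit).
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples, mu)
        history.append(sigma)
    return history


if __name__ == "__main__":
    stds = recursive_fit(n_samples=20, generations=50)
    print(f"generation 0 std: {stds[0]:.3f}, generation 50 std: {stds[-1]:.3f}")
```

Because the maximum-likelihood variance estimate is biased low by a factor of (n - 1)/n, and each generation's sampling noise is inherited by the next, the fitted spread tends to drift toward zero; with small batches the shrinkage is usually visible within a few dozen generations.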

Human creativity is messy, unpredictable, and wonderfully inconsistent. We make typos that become slang. We break grammatical rules for emphasis. We combine ideas in ways that don’t quite make logical sense but somehow work. Synthetic data lacks this beautiful chaos. When models learn primarily from other models, they converge toward a homogenized average. Technically correct but increasingly bland and predictable.

The consequences of this convergence are already measurable in research labs worldwide.

Model Collapse and Performance Decay

The term “model collapse” sounds dramatic because the phenomenon is dramatic.

Research published in Nature demonstrated that indiscriminate training on recursively generated data can lead to outright nonsense [Qat]. Not just slightly worse performance. Complete degradation into meaningless output.

From a statistical perspective, this collapse appears inevitable when training solely on synthetic data [Qat]. The mechanism works like genetic inbreeding: rare but important information disappears first. Edge cases vanish. Nuanced understanding erodes. What remains is a model that handles common scenarios acceptably but fails catastrophically on anything unusual.
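The claim that rare information disappears first can be sketched with a similar toy loop over a discrete vocabulary. Assume generation 0 is a Zipf-like distribution over hypothetical “tokens” (token k has weight 1/(k+1)), and each later generation simply re-estimates token frequencies from a finite sample of the previous one. Once a token goes unsampled its estimated probability is zero, so it can never reappear, and the rare tail is the most likely to hit zero. The name `tail_loss` is illustrative only.

```python
import random
from collections import Counter


def tail_loss(vocab_size: int, n_samples: int, generations: int, seed: int = 0) -> list[int]:
    """Track how many distinct tokens survive repeated re-estimation.

    Generation 0 is Zipf-like: token k has weight 1/(k+1), so a few tokens
    are common and most are rare. Each generation draws a finite sample from
    the current distribution and uses the observed counts as the next one.
    """
    rng = random.Random(seed)
    tokens = list(range(vocab_size))
    weights = [1.0 / (k + 1) for k in tokens]
    surviving = [vocab_size]
    for _ in range(generations):
        counts = Counter(rng.choices(tokens, weights=weights, k=n_samples))
        # A token that was never sampled gets weight 0 and can never return;
        # low-probability (rare) tokens are the most likely to drop out.
        weights = [counts[t] for t in tokens]
        surviving.append(sum(1 for w in weights if w > 0))
    return surviving


if __name__ == "__main__":
    # Distinct tokens still alive every 5 generations.
    print(tail_loss(vocab_size=1000, n_samples=2000, generations=30)[::5])
```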

Particularly troubling is the finding that even small fractions of synthetic data can degrade performance unless carefully controlled [Qat]. You don’t need a training set that’s 100% synthetic to trigger problems. Contamination at much lower levels can initiate the decay process.

Larger models face an especially cruel irony. Research shows that in bigger systems, self-consuming loops cause performance to decline faster than in their smaller counterparts [Qat]. The very scale that makes these models powerful also accelerates their degradation when fed synthetic data.

Once collapse begins, recovery without an injection of fresh human data becomes nearly impossible. The errors compound, the gaps widen, and the model’s understanding of the world grows increasingly distorted.
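A toy Gaussian loop hints at why fresh human data matters for recovery. In this hypothetical `mixed_chain` sketch, each generation is a Gaussian fitted by maximum likelihood to a small batch, but a fraction `real_fraction` of that batch is drawn from the original N(0, 1) source instead of from the previous model. This is an illustration under strong simplifying assumptions, not a measurement of real systems.

```python
import random
import statistics


def mixed_chain(n_samples: int, generations: int, real_fraction: float, seed: int = 0) -> float:
    """Self-consuming Gaussian loop with a share of fresh "human" data.

    Each generation fits a Gaussian to a batch in which `real_fraction` of the
    samples come from the original N(0, 1) source and the rest come from the
    previous generation's fitted model. Returns the final fitted std.
    """
    if not 0.0 <= real_fraction <= 1.0:
        raise ValueError("real_fraction must be between 0 and 1")
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    n_real = round(real_fraction * n_samples)
    for _ in range(generations):
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]  # fresh human data
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_samples - n_real)]
        batch = real + synthetic
        mu = statistics.fmean(batch)
        sigma = statistics.pstdev(batch, mu)
    return sigma


if __name__ == "__main__":
    for frac in (0.0, 0.1, 0.5):
        finals = [mixed_chain(20, 50, frac, seed=s) for s in range(200)]
        print(f"real_fraction={frac}: median final std = {statistics.median(finals):.3f}")
```

With `real_fraction=0.0` the loop is purely self-consuming and the spread typically collapses; mixing in even a modest share of real samples keeps pulling the fit back toward the original distribution.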

Internet Content Pollution Rising Fast

The internet is transforming beneath our feet.

Some estimates suggest that AI-generated content could make up 50-70% of new online material by 2025. From social media posts and news articles to product reviews and forum discussions, synthetic text is flooding every corner of the web.

This creates a detection nightmare. Current tools for identifying AI-generated content consistently lag behind generation capabilities. By the time a detector catches up to one model’s output, three newer models have emerged with different fingerprints. Web scrapers building training datasets cannot reliably distinguish human from machine.

The asymmetry is stark: generating synthetic content is cheap and fast, while verifying authenticity is expensive and slow. Every day, the ratio of synthetic to human content shifts further toward the artificial, making the problem steadily harder to solve.

Real Data Becomes Premium Resource

In this contaminated landscape, authentic human-generated data has become digital gold.

Companies are now paying premium prices for verified human-created datasets with clear provenance. Data marketplaces report dramatic price increases for certified pre-2022 content, created before the current generation of AI tools flooded the internet. The logic is simple: the older the content, the more likely it is to be genuinely human.

Pre-AI era archives are being treated like strategic reserves. Historical content libraries, academic databases, and human interaction logs from before the synthetic flood are attracting exclusive licensing deals from leading AI labs. Organizations that preserved clean data before the contamination began now hold assets of extraordinary value.

The economics of AI development are inverting. Compute power, once the primary constraint, is becoming commoditized. Data quality is emerging as the true bottleneck.

Breaking the Cannibalism Cycle

Escaping the synthetic trap requires action on several fronts.

Content authentication offers one path forward. Standards such as C2PA (from the Coalition for Content Provenance and Authenticity) use cryptographic signatures to record and verify a piece of content’s origin and modification history. Blockchain-based provenance tracking could create an auditable chain of custody for human-created content. These systems don’t prevent synthetic content from existing; they simply make authenticity verifiable.
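The idea behind provenance tracking can be sketched as a hash-linked chain of signed records: each record stores a hash of the content after an action plus a hash of the previous record, so altering either the history or the content breaks verification. This is not the C2PA manifest format or any C2PA API; C2PA relies on certificate-backed public-key signatures, while this sketch substitutes a shared-secret HMAC for brevity. `ProvenanceRecord`, `append_record`, and `verify` are hypothetical names.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    action: str        # e.g. "created", "edited"
    actor: str         # who performed the action
    content_hash: str  # SHA-256 of the content after this action
    prev_hash: str     # hash of the previous record ("" for the first one)
    signature: str     # HMAC over the four fields above

    def record_hash(self) -> str:
        payload = json.dumps([self.action, self.actor, self.content_hash,
                              self.prev_hash, self.signature])
        return hashlib.sha256(payload.encode()).hexdigest()


def _sign(key: bytes, action: str, actor: str, content_hash: str, prev_hash: str) -> str:
    # Stand-in for a real public-key signature.
    message = json.dumps([action, actor, content_hash, prev_hash]).encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def append_record(chain: list[ProvenanceRecord], key: bytes, action: str,
                  actor: str, content: bytes) -> list[ProvenanceRecord]:
    """Return a new chain with a signed record describing `content`."""
    prev_hash = chain[-1].record_hash() if chain else ""
    content_hash = hashlib.sha256(content).hexdigest()
    sig = _sign(key, action, actor, content_hash, prev_hash)
    return chain + [ProvenanceRecord(action, actor, content_hash, prev_hash, sig)]


def verify(chain: list[ProvenanceRecord], key: bytes, content: bytes) -> bool:
    """Check every link and signature, then check the content itself."""
    prev_hash = ""
    for rec in chain:
        expected = _sign(key, rec.action, rec.actor, rec.content_hash, rec.prev_hash)
        if rec.prev_hash != prev_hash or not hmac.compare_digest(rec.signature, expected):
            return False
        prev_hash = rec.record_hash()
    # The latest record must describe the content we were actually handed.
    return bool(chain) and chain[-1].content_hash == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    key = b"demo-key"  # hypothetical shared secret, for illustration only
    chain = append_record([], key, "created", "alice", b"draft")
    chain = append_record(chain, key, "edited", "alice", b"final text")
    print(verify(chain, key, b"final text"))  # True
    print(verify(chain, key, b"tampered"))    # False
```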

Curated repositories represent another strategy. Leading labs are building internal “clean data” reserves, isolated from web scraping pipelines and subject to strict quality controls. Regular authenticity audits ensure these reserves remain uncontaminated. It’s expensive, but the alternative is worse: training on increasingly polluted data.
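One simple form of the authenticity audit described above is an integrity check against a manifest recorded when documents were vetted. The sketch assumes the reserve is a mapping from hypothetical document IDs to raw bytes and that vetting happened at ingestion. It cannot tell whether content is human-written, only whether anything was modified, added outside the vetted pipeline, or lost since then; `build_manifest` and `audit` are illustrative names.

```python
import hashlib


def build_manifest(reserve: dict[str, bytes]) -> dict[str, str]:
    """Record a SHA-256 fingerprint for every document at ingestion time."""
    return {doc_id: hashlib.sha256(data).hexdigest() for doc_id, data in reserve.items()}


def audit(reserve: dict[str, bytes], manifest: dict[str, str]) -> dict[str, list[str]]:
    """Compare the current reserve against the ingestion manifest."""
    report: dict[str, list[str]] = {"modified": [], "unvetted": [], "missing": []}
    for doc_id, data in reserve.items():
        if doc_id not in manifest:
            report["unvetted"].append(doc_id)  # entered outside the vetted pipeline
        elif hashlib.sha256(data).hexdigest() != manifest[doc_id]:
            report["modified"].append(doc_id)  # changed since it was vetted
    report["missing"] = [doc_id for doc_id in manifest if doc_id not in reserve]
    return report


if __name__ == "__main__":
    reserve = {"a": b"human essay", "b": b"forum post"}
    manifest = build_manifest(reserve)
    reserve["b"] = b"forum post (rewritten)"
    reserve["c"] = b"scraped page"
    print(audit(reserve, manifest))
    # {'modified': ['b'], 'unvetted': ['c'], 'missing': []}
```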

For developers building AI systems, practical guidelines are emerging. Some guidance suggests keeping synthetic data below 20% of a training set, although, as noted above, even smaller fractions can cause harm if they are not carefully controlled. Human verification checkpoints in training pipelines can catch contamination before it causes damage. The key is intentionality: knowing exactly what’s in your training data rather than blindly scraping the web.
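A minimal sketch of such a pipeline guard, assuming every example already carries a reliable `synthetic` flag (for instance from provenance metadata, which the detection problem described earlier makes hard to obtain). It keeps all human examples, randomly subsamples synthetic ones to respect a cap, and adds a checkpoint that refuses an over-contaminated mix. The 0.2 default simply mirrors the 20% figure above and is not a validated threshold; `Example`, `build_training_set`, and `check_synthetic_share` are hypothetical names.

```python
import math
import random
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Example:
    text: str
    synthetic: bool  # assumption: a trustworthy label, e.g. from provenance metadata


def build_training_set(examples: list[Example], max_synthetic_share: float = 0.2,
                       seed: int = 0) -> list[Example]:
    """Keep all human examples and randomly subsample synthetic ones so they make
    up at most `max_synthetic_share` of the returned training set."""
    if not 0.0 <= max_synthetic_share < 1.0:
        raise ValueError("max_synthetic_share must be in [0, 1)")
    human = [ex for ex in examples if not ex.synthetic]
    synthetic = [ex for ex in examples if ex.synthetic]
    # s / (h + s) <= p  <=>  s <= p * h / (1 - p); exact fractions avoid float rounding.
    p = Fraction(max_synthetic_share).limit_denominator(10**6)
    budget = math.floor(p * len(human) / (1 - p))
    kept = random.Random(seed).sample(synthetic, min(budget, len(synthetic)))
    return human + kept


def check_synthetic_share(dataset: list[Example], max_share: float) -> None:
    """Verification checkpoint: fail loudly instead of training on a contaminated mix."""
    if not dataset:
        raise ValueError("dataset is empty")
    share = sum(ex.synthetic for ex in dataset) / len(dataset)
    if share > max_share:
        raise RuntimeError(f"synthetic share {share:.1%} exceeds limit {max_share:.0%}")


if __name__ == "__main__":
    data = [Example(f"human {i}", False) for i in range(100)]
    data += [Example(f"synthetic {i}", True) for i in range(100)]
    training_set = build_training_set(data)
    check_synthetic_share(training_set, 0.2)
    print(len(training_set), sum(ex.synthetic for ex in training_set))  # 125 25
```

The cap follows from s / (h + s) ≤ p, which rearranges to s ≤ p·h / (1 - p); with 100 human examples and p = 0.2, at most 25 synthetic examples are kept.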

Without external mitigation, the process leads inevitably to model collapse [Unite]. The good news is that mitigation is possible. The bad news is that it requires effort, investment, and a fundamental shift in how we think about data.

AI’s synthetic data cannibalism isn’t a distant theoretical concern. It’s happening now, with measurable effects on model quality. The recursive training loop amplifies biases, erodes diversity, and can ultimately produce systems that generate nonsense.

The solution requires treating authentic human data as the precious resource it has become. Provenance tracking, curated repositories, and careful pipeline management can break the feedback loop. For anyone building or deploying AI systems, evaluating training data for synthetic contamination should be a priority before degradation becomes irreversible.

In an age of infinite synthetic content, human authenticity has become technology’s most valuable and increasingly endangered resource.
